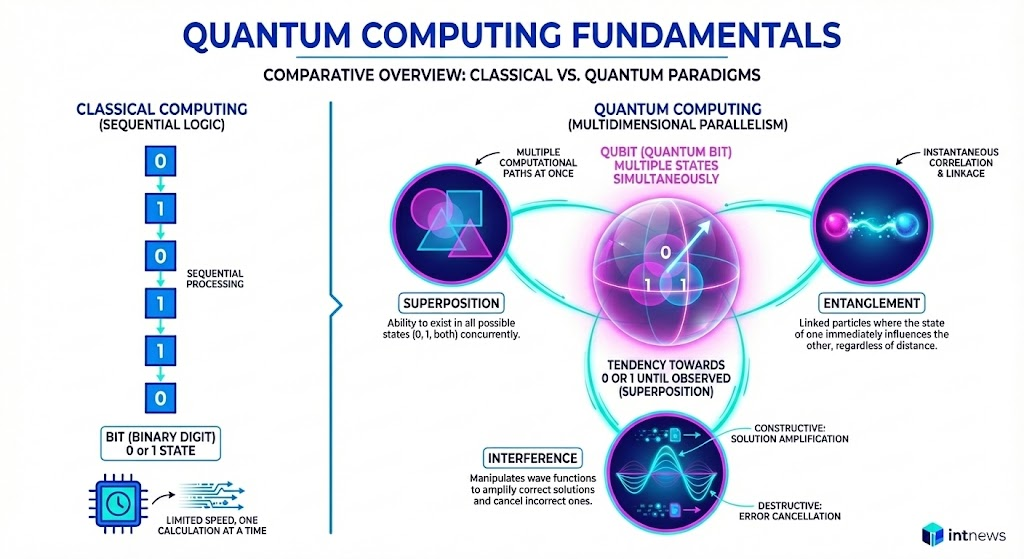

Quantum Computing is an advanced computing paradigm that harnesses quantum-mechanical phenomena, such as superposition and entanglement, to process information in ways that classical computers cannot efficiently replicate. A classical processor, however parallel, always works on bits that are definitely 0 or 1. A quantum computer instead manipulates a state in a space whose dimension doubles with every added qubit, which for certain problems promises to reach answers in seconds where classical methods would need millennia.

Key Takeaways

- Fundamental Unit: Qubit (Quantum Bit) instead of Bit.

- Power: The state space the machine works in grows exponentially as qubits are added (2^n amplitudes for n qubits).

- Impact: Revolution in cryptography, materials science, and pharmacology.

What is Quantum Computing (and what are Qubits)

To understand Quantum Computing, one must abandon traditional binary logic. Classical computers use bits as the minimum unit of information, which can take a value of either 0 or 1, like a switch turned on or off.

The quantum computer, instead, uses qubits (quantum bits). Thanks to the superposition principle of quantum physics, a qubit can exist in state 0, state 1, or a “superposition” of both at the same time, so a register of n qubits can hold a weighted combination of all 2^n bit strings at once. This is often described as performing many calculations in parallel, but a measurement returns only one outcome, so a real speedup requires algorithms that make the correct answer the likely one. As defined by NIST and IBM Research, this capability allows the machine to work in computational spaces that grow exponentially with each new qubit.
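To make the exponential growth concrete, here is a minimal Python sketch, assuming the standard state-vector picture: an n-qubit register is described by 2^n complex amplitudes, and applying a Hadamard gate to every qubit of the all-zeros state gives equal amplitudes on every basis state. The helpers `uniform_superposition` and `measure` are hypothetical names for illustration, not part of any quantum SDK.

```python
import math
import random

def uniform_superposition(n_qubits):
    """Amplitudes after a Hadamard on every qubit of the all-zeros state:
    2**n_qubits equal amplitudes of 1/sqrt(2**n_qubits)."""
    dim = 2 ** n_qubits
    return [complex(1 / math.sqrt(dim))] * dim

def measure(state, rng):
    """Born rule: return one basis-state index, chosen with probability |amplitude|**2."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs, k=1)[0]

state = uniform_superposition(3)
print(len(state))                                   # 8 amplitudes for 3 qubits
print(round(sum(abs(a) ** 2 for a in state), 10))   # 1.0: probabilities sum to one
print(format(measure(state, random.Random(0)), "03b"))  # a single 3-bit outcome
```

Measuring the register yields just one bit string, and the other amplitudes are lost. Storing the list on a classical machine also takes memory proportional to 2^n, which is why brute-force classical simulation runs out of memory at a few dozen qubits.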

How a quantum computer works

The operation of these machines is based on the precise manipulation of quantum systems (such as photons, trapped ions, or superconducting circuits) through three fundamental principles of quantum mechanics:

- Superposition: As mentioned, this allows qubits to represent multiple possible combinations simultaneously.

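- Entanglement: This is a phenomenon where two or more qubits become linked so that their joint state cannot be described one qubit at a time; measuring one gives results correlated with measurements of the other, even if they are physically separated (although this correlation cannot be used to send information). Entanglement is what lets a register of qubits behave as a single computational system rather than a collection of independent bits.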

- Interference: The computer uses quantum interference to amplify the amplitudes of correct solutions (constructive interference) and cancel out those of wrong ones (destructive interference) as the computation proceeds.
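The three principles can be seen in a toy state-vector simulator. The Python sketch below is a hedged illustration, not any SDK's API: `apply_h`, `apply_cnot` and `probabilities` are hypothetical helpers, and qubit 0 is assumed to be the least significant bit of each basis-state index. A Hadamard followed by a CNOT entangles two qubits into a Bell state, and two Hadamards in a row show interference returning a qubit to |0⟩.

```python
import math

# An n-qubit state is a list of 2**n complex amplitudes. Bit q of a list index
# gives the value of qubit q in that basis state (qubit 0 = least significant bit).
INV_SQRT2 = 1 / math.sqrt(2)

def apply_h(state, q):
    """Hadamard on qubit q: |0> -> (|0>+|1>)/sqrt2, |1> -> (|0>-|1>)/sqrt2."""
    new = state[:]
    for i in range(len(state)):
        if not (i >> q) & 1:                 # i has qubit q = 0; j is its partner with qubit q = 1
            j = i | (1 << q)
            a, b = state[i], state[j]
            new[i] = INV_SQRT2 * (a + b)     # sum of the two amplitudes
            new[j] = INV_SQRT2 * (a - b)     # difference of the two amplitudes
    return new

def apply_cnot(state, control, target):
    """Flip the target qubit in every basis state whose control qubit is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new

def probabilities(state):
    """Born rule: the probability of each basis state is |amplitude|**2."""
    return [round(abs(a) ** 2, 6) for a in state]

# Entanglement: H on qubit 0, then CNOT(0 -> 1), gives the Bell state (|00> + |11>)/sqrt2.
bell = apply_cnot(apply_h([1 + 0j, 0j, 0j, 0j], 0), control=0, target=1)
print(probabilities(bell))   # [0.5, 0.0, 0.0, 0.5]: outcomes 01 and 10 never occur

# Interference: H twice on |0> returns |0>; the two contributions to |1> cancel out.
print(probabilities(apply_h(apply_h([1 + 0j, 0j], 0), 0)))   # [1.0, 0.0]
```

In the Bell state each qubit on its own is 0 or 1 with equal probability, yet the two results always agree. In the second example the amplitude for |1⟩ is the difference of two equal contributions, so it cancels to zero.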


Concrete applications of Quantum Computing

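The adoption of Quantum Computing is not intended to make our smartphones faster, but to tackle specific calculations that are currently intractable for classical computers, typically problems with special structure such as simulating quantum systems or factoring integers (quantum computers are not expected to solve NP-hard problems efficiently in general):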

- Chemistry and Pharmacology: Simulating complex molecular interactions to discover new drugs with less trial-and-error lab testing (e.g., protein folding).

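- Financial Optimization: Calculating risk scenarios on global portfolios faster than classical Monte Carlo simulation allows (quantum-accelerated Monte Carlo based on amplitude estimation, which needs quadratically fewer samples for the same precision).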

- Cryptography: Shor’s algorithm shows that a sufficiently large, error-corrected quantum computer could factor large integers efficiently and thus break current RSA security standards, which is driving the move towards Post-Quantum Cryptography.
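Shor’s algorithm reduces factoring an integer n to finding the order r of a base a modulo n (the smallest r with a^r ≡ 1 mod n); only that order-finding step needs a quantum computer. The Python sketch below shows the classical reduction around it, with a brute-force loop standing in for the quantum subroutine. This is an assumption made for illustration: the loop takes time exponential in the number of digits of n, which is exactly the step a quantum computer would speed up. `find_order` and `shor_classical_part` are hypothetical names.

```python
import math

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, assuming gcd(a, n) == 1.
    Brute force stands in for the quantum period-finding subroutine."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical_part(n, a):
    """Try to split n using base a, following the reduction in Shor's algorithm."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g             # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None                  # odd order: retry with another a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None                  # a**(r/2) is -1 mod n: retry with another a
    p = math.gcd(x - 1, n)           # nontrivial factor, since x is neither 1 nor -1 mod n
    return p, n // p

print(shor_classical_part(15, 7))    # (3, 5)
```

With n = 15 and a = 7 the order is 4, and gcd(7^2 − 1, 15) = 3 yields the factors 3 and 5. When the function returns None, the full algorithm simply retries with a different random base.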

State of the art and future challenges

We are currently in the NISQ (Noisy Intermediate-Scale Quantum) era. Today’s quantum processors, such as those developed by Google (Sycamore) or IBM (Eagle/Osprey), are powerful but “noisy”: their qubits are extremely sensitive to environmental interference (heat, electromagnetic noise), which destroys their fragile quantum states (decoherence) and introduces errors into calculations.
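A back-of-the-envelope calculation shows why noise limits how long a useful NISQ computation can run. The Python sketch below assumes a deliberately simple model: every gate fails independently with the same hypothetical probability of 0.1%. Real devices have correlated errors, different rates per gate type, and readout errors, so the numbers are illustrative only. `circuit_success_probability` is a hypothetical helper.

```python
def circuit_success_probability(error_per_gate, n_gates):
    """Probability that none of n_gates gates fails, assuming independent,
    identical gate errors (a toy model, not a device characterization)."""
    return (1 - error_per_gate) ** n_gates

for n_gates in (100, 1_000, 10_000):
    p_ok = circuit_success_probability(0.001, n_gates)   # hypothetical 0.1% error per gate
    print(f"{n_gates:>6} gates -> {p_ok:.1%} chance of an error-free run")
```

Under this model a 100-gate circuit runs error-free about 90% of the time, a 1,000-gate circuit only about 37% of the time, and a 10,000-gate circuit almost never.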

The main engineering challenge of the coming years is Quantum Error Correction: creating stable systems with thousands of error-corrected “logical qubits”, each encoded redundantly across many physical qubits, to move from scientific experimentation to large-scale commercial application.
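The core idea of error correction (redundancy plus decoding) can be sketched with the classical three-bit repetition code in Python. This is a simplified analogy, not a quantum code: real schemes such as the surface code cannot simply copy qubits (the no-cloning theorem forbids it), must also correct phase errors, and detect errors through syndrome measurements rather than by reading the data. The helper names and the independent bit-flip noise model are assumptions for illustration.

```python
import random

def encode(bit):
    """Repetition code: store one logical bit in three physical bits."""
    return [bit, bit, bit]

def apply_noise(bits, p, rng):
    """Flip each physical bit independently with probability p (toy noise model)."""
    return [b ^ (rng.random() < p) for b in bits]

def decode(bits):
    """Majority vote recovers the logical bit if at most one physical bit flipped."""
    return int(sum(bits) >= 2)

def logical_error_rate(p):
    """Exact failure probability: two or all three bits flip."""
    return 3 * p**2 * (1 - p) + p**3

def simulate(p, trials, seed=0):
    """Monte Carlo estimate of how often the decoded logical bit is wrong."""
    rng = random.Random(seed)
    failures = sum(decode(apply_noise(encode(0), p, rng)) != 0 for _ in range(trials))
    return failures / trials

p = 0.05
print(logical_error_rate(p))     # about 0.00725, below the physical error rate of 0.05
print(simulate(p, 100_000))      # a sampled estimate close to the exact value
```

Redundancy only helps when the physical error rate is low enough: here the logical rate beats the physical rate for any p below 0.5. Quantum codes show the same threshold behaviour, which is why reducing physical qubit noise and scaling up qubit counts go hand in hand.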