NVIDIA has recently launched a new series of AI personal computers built on its Blackwell architecture: the Blackwell DGX. The line marks a milestone in the evolution of computing infrastructure for artificial intelligence (AI), deep learning and machine learning applications, and is aimed at researchers, engineers and developers who need high performance and scalability in a compact system.

Blackwell Architecture: The new frontier of computational power

At the heart of the Blackwell DGX is the Blackwell architecture, NVIDIA's latest generation of GPU (graphics processing unit) design and the successor to the Hopper architecture, which itself followed Ampere. Blackwell is designed to handle the computational demands of artificial intelligence, simulation and high-performance computing (HPC) efficiently.

Blackwell GPUs are manufactured on a custom TSMC 4NP process and are optimized to run AI algorithms and deep learning models at high speed. They support NVIDIA's CUDA parallel computing platform and feature fifth-generation Tensor Cores, specialized units for the matrix multiply-accumulate operations at the core of deep learning, which accelerate both the training and the inference of advanced AI models.
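
Tensor Cores accelerate matrix multiply-accumulate operations, commonly taking reduced-precision inputs such as FP16 while accumulating results in FP32. The pure-Python sketch below emulates only those numerics on a CPU; it does not use a GPU or any NVIDIA API, the function names are illustrative, and FP16 is just one of several input formats Tensor Cores support.

```python
import struct
from typing import List

Matrix = List[List[float]]


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value.

    Raises OverflowError if |x| exceeds the FP16 range (about 65504).
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mixed_precision_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Compute C = A @ B with FP16 inputs and an FP32 running sum.

    This only emulates the arithmetic on a CPU; it says nothing about
    how the hardware schedules the work.
    """
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions of A and B must match")
    # Quantize both operands to FP16 (the reduced-precision inputs).
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    c = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            acc = 0.0
            for p in range(k):
                # Round the running sum to FP32 after every multiply-add.
                acc = to_fp32(acc + a16[i][p] * b16[p][j])
            c[i][j] = acc
    return c


if __name__ == "__main__":
    print(to_fp16(0.1))  # FP16 keeps only about 3 significant decimal digits
    print(mixed_precision_matmul([[0.1, 0.2]], [[0.3], [0.4]]))
```

Keeping the accumulator in FP32 limits the error that would otherwise build up when many low-precision products are summed.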

Blackwell GPUs also support NVIDIA NVLink, a high-speed interconnect that links multiple GPUs together so that computing capacity grows with the number of GPUs (close to linearly for workloads that parallelize well), significantly increasing the computing power available to distributed AI applications.
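
How close multi-GPU scaling comes to linear depends on how much of the workload actually parallelizes. Amdahl's law, S(N) = 1 / ((1 - p) + p / N), gives an upper bound on the speedup S with N GPUs when a fraction p of the work parallelizes. The sketch below evaluates it for hypothetical values of p; it is a simple model, not a measurement of NVLink or Blackwell hardware.

```python
def amdahl_speedup(parallel_fraction: float, num_gpus: int) -> float:
    """Speedup over one GPU predicted by Amdahl's law.

    parallel_fraction is the share of single-GPU runtime that can be
    split evenly across GPUs; the rest stays serial. Communication
    overhead is ignored, so real speedups will be lower.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_gpus)


def scaling_efficiency(parallel_fraction: float, num_gpus: int) -> float:
    """Fraction of ideal linear speedup achieved (1.0 means linear)."""
    return amdahl_speedup(parallel_fraction, num_gpus) / num_gpus


if __name__ == "__main__":
    # Hypothetical parallel fractions, chosen only for illustration.
    for p in (1.0, 0.99, 0.95):
        s = amdahl_speedup(p, 8)
        e = scaling_efficiency(p, 8)
        print(f"parallel fraction {p:.2f}: {s:.2f}x on 8 GPUs ({e:.0%} efficiency)")
```

Even a 5% serial share caps eight GPUs well below an 8x speedup, which is why "linear" scaling is best read as an upper limit.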

Computing power in personal computer format

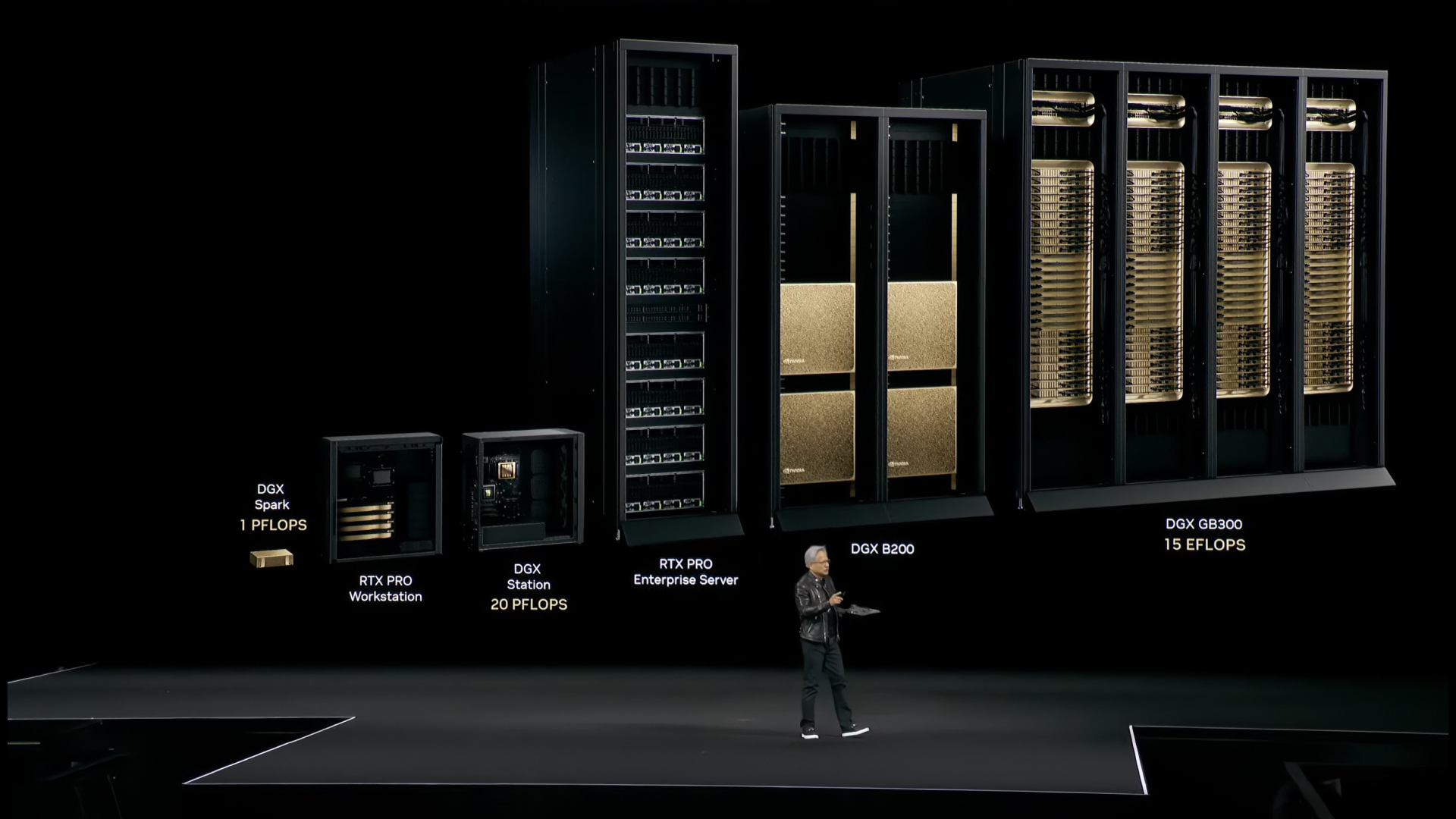

Blackwell DGX systems are designed to deliver computing power traditionally reserved for supercomputers and data centers in a personal computer form factor suited to development and research environments. This is a significant shift for the industry: more professionals and teams can access high-end computing capability without needing large-scale infrastructure.

Each Blackwell DGX unit integrates NVIDIA Blackwell GPUs paired with high-bandwidth memory (HBM3e), which offers far more bandwidth than conventional GDDR memory and is critical for AI workloads dominated by memory access. The GPUs are coupled with Arm-based NVIDIA Grace CPUs, connected over NVIDIA's NVLink-C2C chip-to-chip interconnect, which improves overall system efficiency.
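
The importance of memory bandwidth can be made concrete with the roofline model: a kernel's attainable throughput is the lower of the GPU's peak compute rate and its memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs performed per byte moved). The sketch below uses made-up peak and bandwidth figures, not published Blackwell specifications, to show why low-intensity operations such as matrix-vector products are limited by memory rather than compute.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      intensity_flop_per_byte: float) -> float:
    """Roofline bound: min(compute peak, bandwidth x arithmetic intensity).

    TB/s multiplied by FLOP/byte gives TFLOP/s, so the units line up.
    """
    return min(peak_tflops, bandwidth_tb_s * intensity_flop_per_byte)


def matmul_intensity(m: int, n: int, k: int, bytes_per_element: int = 2) -> float:
    """Arithmetic intensity of C(m x n) = A(m x k) @ B(k x n).

    Assumes each matrix crosses the memory bus exactly once, which is
    the best case; real kernels usually move more data.
    """
    flops = 2 * m * n * k  # one multiply and one add per term
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved


if __name__ == "__main__":
    # Placeholder numbers for illustration only, not Blackwell specifications.
    PEAK_TFLOPS = 1000.0
    BANDWIDTH_TB_S = 8.0
    shapes = {"matrix-vector": (4096, 1, 4096), "large matmul": (8192, 8192, 8192)}
    for name, (m, n, k) in shapes.items():
        ai = matmul_intensity(m, n, k)
        bound = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TB_S, ai)
        print(f"{name}: {ai:.1f} FLOP/byte -> at most {bound:.0f} TFLOP/s")
```

With these placeholder figures the matrix-vector product reaches under 1% of peak compute, so raising memory bandwidth, not compute, is what speeds it up.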

NVIDIA software and integration with AI tools

A key feature of the Blackwell DGX series is its integration with NVIDIA AI Enterprise, a software suite of tools and libraries for developing, running and optimizing artificial intelligence applications. This software lets developers take full advantage of Blackwell GPUs by streamlining machine learning, deep learning and data science workflows.

NVIDIA Triton Inference Server, a core component of the software suite, is open-source inference serving software that deploys trained models from multiple frameworks (such as TensorRT, PyTorch and ONNX) and serves real-time inference requests at scale. CUDA-X AI is a collection of GPU-accelerated libraries for scientific computing, deep learning and data analysis, making Blackwell DGX systems suitable for applications ranging from medical research to physical simulation and industrial data analysis.
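
Triton exposes an HTTP/REST endpoint that follows the KServe v2 inference protocol and listens on port 8000 by default. The standard-library sketch below only builds a request for a hypothetical model named `my_model` with a single FP32 input called `INPUT0`; real input names, shapes and datatypes come from the model's configuration on the server, and actually sending the request requires a running Triton instance.

```python
import json
import urllib.request
from typing import Sequence


def build_infer_request(host: str, model_name: str, input_name: str,
                        values: Sequence[float],
                        port: int = 8000) -> urllib.request.Request:
    """Build (but do not send) a KServe v2 HTTP inference request.

    model_name and input_name must match the model configuration on the
    server; the ones used below are hypothetical.
    """
    payload = {
        "inputs": [
            {
                "name": input_name,
                "shape": [1, len(values)],  # a batch containing one row
                "datatype": "FP32",
                "data": list(values),
            }
        ]
    }
    url = f"http://{host}:{port}/v2/models/{model_name}/infer"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_infer_request("localhost", "my_model", "INPUT0", [1.0, 2.0, 3.0])
    print(req.get_method(), req.full_url)
    # Sending requires a running Triton server that serves "my_model":
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read())["outputs"])
```

Because the protocol is plain JSON over HTTP, any language's HTTP client can call a deployed model; Triton also ships dedicated client libraries and a gRPC endpoint.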

Another notable feature is integration with NVIDIA Omniverse, a platform for building 3D simulations and collaborative virtual environments, useful for training AI agents and developing immersive applications in complex scenarios. Bringing these tools together in one ecosystem lets AI developers optimize the entire application lifecycle, from prototyping to large-scale deployment.

Performance and scalability: the strength of parallel computing

Blackwell DGX systems are designed to meet demanding computational workloads through a scalable architecture. Systems can be configured with multiple NVIDIA Blackwell GPUs connected via high-speed NVLink, enabling highly parallel configurations that run simulations or train very large AI models on complex data sets.
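
When a model is described as very large, the first question is whether its training state fits in GPU memory at all. The back-of-the-envelope sketch below uses the commonly cited figure of about 16 bytes per parameter for mixed-precision training with the Adam optimizer, together with a hypothetical per-GPU memory size. It ignores activations, so it gives only a lower bound on the number of GPUs needed, assuming the state is sharded evenly across them.

```python
import math


def training_memory_gb(num_params: float, bytes_per_param: float = 16.0) -> float:
    """Rough memory for model state during mixed-precision Adam training.

    16 bytes/param is a common rule of thumb: FP16 weights (2) and
    gradients (2), plus FP32 master weights, momentum and variance
    (4 + 4 + 4). Activations and framework overhead are NOT included.
    """
    return num_params * bytes_per_param / 1e9


def min_gpus_for_model_state(num_params: float, gpu_memory_gb: float,
                             bytes_per_param: float = 16.0,
                             usable_fraction: float = 0.9) -> int:
    """Smallest GPU count whose combined memory holds the model state,
    assuming the state is sharded evenly and only usable_fraction of
    each GPU's memory is available for it."""
    needed = training_memory_gb(num_params, bytes_per_param)
    return math.ceil(needed / (gpu_memory_gb * usable_fraction))


if __name__ == "__main__":
    # gpu_memory_gb=80 is a hypothetical per-GPU capacity for illustration.
    for params in (7e9, 70e9):
        gb = training_memory_gb(params)
        gpus = min_gpus_for_model_state(params, 80)
        print(f"{params / 1e9:.0f}B params: ~{gb:.0f} GB of state, >= {gpus} GPUs")
```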

The Blackwell DGX is also designed to integrate with multi-cloud and on-premises environments, adding flexibility in how AI workloads are managed. Scaling horizontally across multiple systems lets large organizations and research institutions run entire GPU farms in parallel, reducing the time needed to train deep learning models and accelerating innovation in AI technologies.
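
Scaling training horizontally usually means data parallelism: each GPU processes a different slice of the data, and gradients are summed across all GPUs after every step with an all-reduce operation. Collective libraries such as NVIDIA NCCL use ring-based algorithms among others. The single-process simulation below illustrates the classic ring all-reduce (a reduce-scatter phase followed by an all-gather phase), with plain Python lists standing in for per-GPU buffers; it is a teaching sketch, not NCCL's implementation.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a ring all-reduce (sum) over n workers' equal-length buffers.

    Returns new buffers; on exit every worker holds the element-wise sum.
    """
    n = len(buffers)
    if n == 0:
        return []
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all buffers must have the same length")
    data = [list(b) for b in buffers]  # data[i] is worker i's local buffer
    # Split the index range into n contiguous chunks (sizes differ by at most 1).
    bounds = [length * c // n for c in range(n + 1)]

    def chunk(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    def ring_step(pick_chunk, combine) -> None:
        # Every worker sends one chunk to its right-hand neighbour at once:
        # snapshot all outgoing chunks before applying any of them.
        outgoing = []
        for i in range(n):
            c = pick_chunk(i)
            outgoing.append((c, [data[i][k] for k in chunk(c)]))
        for i, (c, payload) in enumerate(outgoing):
            dst = (i + 1) % n
            for k, v in zip(chunk(c), payload):
                data[dst][k] = combine(data[dst][k], v)

    # Phase 1, reduce-scatter: after n - 1 steps worker i holds the
    # complete sum for chunk (i + 1) % n.
    for step in range(n - 1):
        ring_step(lambda i: (i - step) % n, lambda old, new: old + new)
    # Phase 2, all-gather: pass the finished chunks around the ring.
    for step in range(n - 1):
        ring_step(lambda i: (i + 1 - step) % n, lambda old, new: new)
    return data


if __name__ == "__main__":
    grads = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0], [100.0, 200.0, 300.0, 400.0]]
    for worker, buf in enumerate(ring_allreduce(grads)):
        print(worker, buf)
```

In a ring of N workers, each worker sends about 2(N - 1)/N times its buffer size in total, so per-worker traffic stays nearly constant as the ring grows.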

Applications in innovative sectors

Applications for Blackwell DGX systems span a wide range of fields, including scientific research, applied artificial intelligence, industrial automation, healthcare and biotechnology, and the automotive industry.

In healthcare, for example, the parallel computing capability and inference speed of Blackwell DGX systems help analyze large volumes of data from medical devices, DNA sequencing and medical imaging. In the automotive sector, these systems can be used to develop and train autonomous driving models, while in physical simulation and scientific research they provide the computing power to run high-fidelity simulations significantly faster.

Conclusions: The new era of AI power at your fingertips

With the introduction of Blackwell DGX, NVIDIA has pushed the boundaries of technological innovation further by making advanced AI computing power available in a personal computer form factor. These systems mark a turning point in the use of GPUs for high-performance computing, letting researchers, developers and companies run applications that once required large data centers or supercomputers.

The combination of state-of-the-art hardware, optimized software and scalability makes the Blackwell DGX a strong platform for next-generation artificial intelligence, opening new horizons in technological innovation and accelerating scientific and industrial progress.